Subjective Comparison of Video Matting Methods

In this post we briefly describe how we used Subjectify.us platform to carry out subjective comparison of video and image matting methods. In the last section we show study results and discuss them.

Introduction

Formally, matting is an inverse alpha-compositing problem: i.e., given image or video \(I\) we want to decompose it to foreground \(F\), background \(B\) and transparency map \(\alpha\) such that: $$ I = \alpha F + (1 - \alpha) B. $$

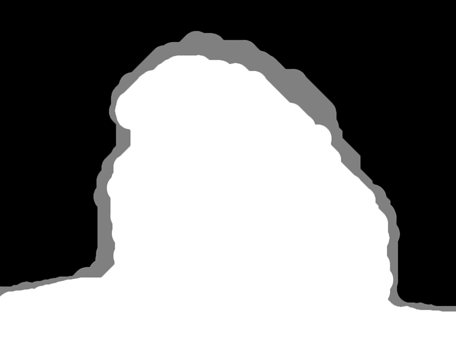

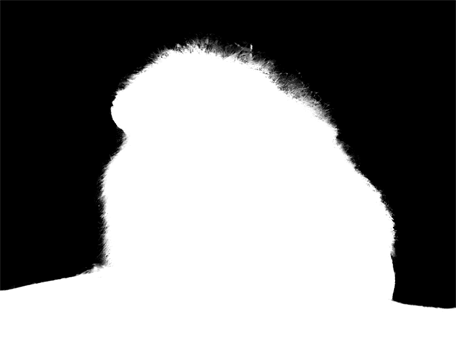

This problem is of high importance for a number of image and video editing tasks (e.g. background replacement, applying a visual effect to a single object only, etc.). The matting problem is clearly ill-posed. However, it can be solved with additional assumptions about natural images structure and additional user-input. One of the most widely-used types of such input is trimap (map with three types of labels: background, foreground, unknown). Example of matting input and output data is shown below.

|

|

|

|

|

|---|---|---|---|---|

Both image and video matting are well-studied research areas, thus there exists number of methods (Wang, Cohen, & others, 2008). In this study we wanted to find out which of matting methods delivers results with the best visual quality. The one can solve this problem by comparing output produced by these methods with ground-truth using objective metrics (e.g. SSD). Example of such comparison can be found at AlphaMatting.com and VideoMatting.com. Unfortunately, none of existing objective quality metrics has perfect correlation with human perception. Thus, we used Subjectify.us platform to carry out subjective comparison of matting methods.

Data Preparation

We used dataset from VideoMatting.com in our comparison. Dataset consists of 10 video sequences. For each video sequence it includes three trimap sequences of different accuracy level: narrow, medium, wide. We applied 13 video and image matting methods to all trimap levels of each video sequence. Foreground objects extracted by matting methods were composed with checkerboard pattern and resulting videos were compressed with x264 software encoder. You can view these videos online. Besides results generated by matting methods, we also included ground-truth results in the set of videos for comparison and uploaded it to Subjectify.us platform.

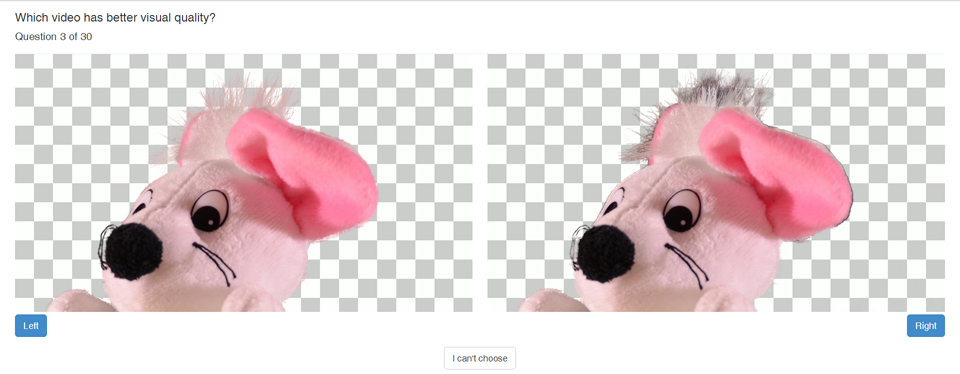

Perceptual Data Collection

Subjectify.us platform hired study participants and showed them videos from the provided dataset in pairwise fashion. For each pair, participants were asked to choose the video with better visual quality or indicate that they are approximately equal. Below we show user interface that was displayed to study participants.

Each study participant compared 30 pairs including 4 hidden quality-control comparisons between ground truth and a low-quality method; answers of 23 participants were rejected, since they failed at least one quality-control question. In total 10556 answers from 406 participants were collected.

Results Analysis

Subjectify.us platform converts pairwise comparisons to final subjective ranks using Bradley-Terry (Bradley & Terry, 1952) and Crowd Bradley-Terry (Chen, Bennett, Collins-Thompson, & Horvitz, 2013) models. The study report generated by the platform is shown below.

Expectedly ground-truth has significantly outperformed all matting methods despite of trimap level. Recently proposed Deep Matting (Xu, Price, Cohen, & Huang, 2017) leveraging the power of neural nets took the first place among matting methods. Moreover, Deep Matting appears to be more robust to trimap accuracy than its competitors, since despite win with small margin on narrow trimap, it was able to significantly outperform competitors on wide trimap. Notably Closed Form (Levin, Lischinski, & Weiss, 2008) and Learning Based (Zheng & Kambhamettu, 2009) methods proposed in 2008 and 2009 respectively were able to outperform most of their more recent competitors.

References

- Wang, J., Cohen, M. F., & others. (2008). Image and video matting: a survey. Foundations and Trends in Computer Graphics and Vision, 3(2), 97–175.

- Bradley, R. A., & Terry, M. E. (1952). Rank analysis of incomplete block designs: I. The method of paired comparisons. Biometrika, 39(¾), 324–345.

- Chen, X., Bennett, P. N., Collins-Thompson, K., & Horvitz, E. (2013). Pairwise ranking aggregation in a crowdsourced setting. In Proceedings of the sixth ACM international conference on Web search and data mining - WSDM ’13. Association for Computing Machinery (ACM). http://doi.org/10.1145/2433396.2433420

- Xu, N., Price, B., Cohen, S., & Huang, T. (2017). Deep Image Matting.

- Levin, A., Lischinski, D., & Weiss, Y. (2008). A Closed-form Solution to Natural Image Matting. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 30(2), 228–242. http://doi.org/10.1109/TPAMI.2007.1177

- Zheng, Y., & Kambhamettu, C. (2009). Learning based Digital Matting. In International Conference on Computer Vision (ICCV) (pp. 889–896). http://doi.org/10.1109/ICCV.2009.5459326